5 Catalogue

The catalogue service enables projects to create their own SpatioTemporal Asset Catalogue (STAC), a collection of metadata that organizes geospatial data assets and makes them easy to discover. The metadata is stored and served as JSON documents, which are readable by both people and software.
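To make the JSON format concrete, the snippet below builds a minimal STAC Item in Python and serializes it. It is only a sketch: the identifiers, coordinates, and URLs are hypothetical placeholders, and a real project catalogue would add project-specific properties and further links.

```python
import json

# Minimal STAC Item: a GeoJSON Feature with STAC fields added.
# Every id, coordinate and URL here is a hypothetical placeholder.
item = {
    "type": "Feature",
    "stac_version": "1.0.0",
    "id": "example-item-2024-06-01",
    # Footprint of the data in WGS84 longitude/latitude.
    "geometry": {
        "type": "Polygon",
        "coordinates": [[
            [4.0, 50.0], [5.0, 50.0], [5.0, 51.0], [4.0, 51.0], [4.0, 50.0],
        ]],
    },
    # Bounding box [west, south, east, north] of the geometry above.
    "bbox": [4.0, 50.0, 5.0, 51.0],
    # Acquisition time; temporal search filters on this property.
    "properties": {"datetime": "2024-06-01T10:30:00Z"},
    "links": [
        {"rel": "self",
         "href": "https://catalogue.example.org/items/example-item-2024-06-01.json"},
    ],
    # Assets point at the actual files; the metadata only references them.
    "assets": {
        "data": {
            "href": "https://data.example.org/example-item-2024-06-01.tif",
            "type": "image/tiff; application=geotiff; profile=cloud-optimized",
            "roles": ["data"],
        },
    },
}

print(json.dumps(item, indent=2))
```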

Instantiating a project-specific catalogue gives projects the freedom to use it for a range of purposes, from experimental to operational use cases. Assets that are considered project deliverables will normally be added to the centralized project results repository.

STAC organizes data into items (each describing a set of related assets, such as the files of one acquisition), collections, and catalogues, which simplifies search and access based on attributes such as time, location, and other metadata. A STAC catalogue is designed for simplicity: it operates as a REST-based web service reached through a single root URL. This API-centric design makes it easy to integrate into existing applications and tools, including other APEx services.
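Search by time and location can be illustrated with a STAC API Item Search request. The sketch below only builds the request with Python's standard library; the catalogue URL and collection name are hypothetical, while the body uses the standard Item Search parameters `collections`, `bbox`, `datetime`, and `limit`.

```python
import json
import urllib.request

# Hypothetical root URL of a project catalogue; not an actual APEx endpoint.
CATALOGUE_ROOT = "https://catalogue.example.org"


def build_search_request(collections, bbox, start, end, limit=10):
    """Build (but do not send) a STAC API Item Search request.

    `bbox` is [west, south, east, north] in WGS84, `datetime` is an
    RFC 3339 interval "start/end", and `limit` caps the page size.
    """
    body = {
        "collections": list(collections),
        "bbox": list(bbox),
        "datetime": f"{start}/{end}",
        "limit": limit,
    }
    return urllib.request.Request(
        url=f"{CATALOGUE_ROOT}/search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_search_request(
    collections=["example-collection"],
    bbox=[4.0, 50.0, 5.0, 51.0],
    start="2024-06-01T00:00:00Z",
    end="2024-06-30T23:59:59Z",
)
```

Passing `req` to `urllib.request.urlopen` would send it; a STAC API answers with a GeoJSON FeatureCollection of matching Items. The same filters can also be sent as query parameters to `GET /search`.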

5.1 Showcase Scenarios

The product catalogue supports several scenarios that benefit projects:

- Data Accessibility: The service offers easy access to a wide range of geospatial data sources, enabling project teams to quickly locate and utilize relevant data without the need to manage large datasets locally.

- Tool Integration: By adhering to the STAC standard, the service improves interoperability among different data sources, software tools, and processing platforms such as openEO and OGC Application Packages. This allows datasets from various providers to be integrated seamlessly, supporting comprehensive analysis and decision-making. This interoperability extends to other APEx services such as the Dashboard Service, Interactive Development Environments, and User Workspaces.

- Efficient Search and Discovery: Users can efficiently search and discover datasets based on specific criteria such as time, location, and data type. This capability streamlines research, planning, and operational tasks by providing quick access to relevant information.

- Enhanced Collaboration: The service promotes collaboration by providing a centralized platform where project stakeholders can access and share geospatial data and analyses. This collaborative environment fosters innovation and knowledge sharing across disciplines and organizations.

5.2 User Stories

An ESA project wants to publish its ‘official’ project results into a shared catalogue for long-term preservation.

An ESA project wants a catalogue for intermediate or temporary results, mainly for internal use and for visualizing those results.

An ESA project wants to administer its own catalogue. It should be able to group relevant users into a ‘project_X_admin’ group, giving them the ability to create and modify collections.

As APEx operators, we want to back up the database, to avoid losing catalogue data in case of a disaster.

As APEx operators, we want to apply security fixes and bug fixes to all catalogue instances in a cost-effective manner.

As APEx operators, we want to observe catalogue response times, so that we are notified when a catalogue instance misbehaves.

As APEx operators, we want to define and enforce limits on catalogue usage, so that costs stay within the foreseen bounds and we are notified when a project requires an upgrade to a larger package. Such a limit could be a cap on the number of daily HTTP requests, or a fixed resource size for the catalogue pods.
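A daily request limit with an upgrade notification could work roughly as sketched below. The policy (a per-project counter that resets each calendar day and warns operators once at 80% of the quota) and the `DailyQuota` class are hypothetical; in practice such limits would more likely be enforced by the API gateway than in application code.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Tuple


@dataclass
class DailyQuota:
    """Per-project daily request quota (hypothetical policy).

    `limit` is the number of requests allowed per calendar day and
    `warn_ratio` the fraction of the limit at which operators are told
    that the project may need a larger package.
    """
    limit: int
    warn_ratio: float = 0.8
    day: date = field(default_factory=date.today)
    count: int = 0
    warned: bool = False

    def record(self, today: date) -> Tuple[bool, bool]:
        """Count one request; return (allowed, notify_operators)."""
        if today != self.day:          # new day: reset the counter
            self.day, self.count, self.warned = today, 0, False
        if self.count >= self.limit:
            return False, False        # over quota: reject (e.g. HTTP 429)
        self.count += 1
        notify = not self.warned and self.count >= self.warn_ratio * self.limit
        if notify:
            self.warned = True         # notify operators only once per day
        return True, notify
```

For example, with `limit=5` the fourth request of a day triggers a single operator notification, the fifth is still served, and the sixth is rejected until the next day.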

5.3 Business Model

We can define different flavours that may be relevant to our users:

- The ‘official’ ESA application project catalogue for long-term preservation. We expect the highest request volume for this instance. It does not need to be instantiated dynamically. Cloud costs can be covered by the APEx project itself.

- A project-specific catalogue with a shared database. This would probably be the cheapest option, and sufficient for the majority of projects.

- A project-specific catalogue with an isolated database. This is a more costly option, which would only be needed for the most demanding projects.

Offering these as separate flavours would allow us to give attractive options to projects of different sizes.

Demand for this service will be hard to anticipate. It may therefore be sufficient to start with only the ‘official’ option, together with a second (shared) instance that can be used for testing purposes and intermediate results.

5.4 Technical Architecture

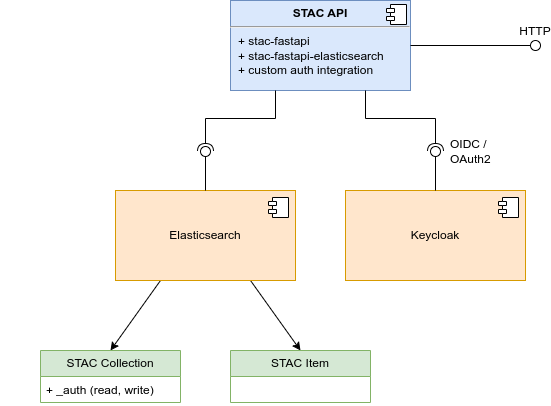

The product catalogue deployment consists of a web service pod and a managed database. The web service is based on STAC FastAPI, which offers multiple backend implementations for different database technologies:

- https://github.com/stac-utils/stac-fastapi-elasticsearch-opensearch

- https://github.com/stac-utils/stac-fastapi-pgstac

STAC FastAPI has demonstrated robustness in running production-grade catalogues.

Based on previous experience, the project team favours the Elasticsearch backend. It minimizes the need for database tuning, which typically requires deep knowledge of the database software and varies with project-specific setups such as STAC metadata definitions. A low-maintenance option like Elasticsearch is therefore preferable.

The service may experience high request volumes, particularly when used together with a viewing service such as an APEx Dashboard. Achieving low request latency therefore requires a performant database setup.

In addition to the core catalogue components, a proper deployment should include a reverse proxy or API gateway. This component should support features such as caching and rate limiting, to protect the service and optimize its performance.
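As an illustration of the caching side, the sketch below shows time-to-live (TTL) caching of responses keyed by URL, the kind of behaviour a reverse proxy would be configured to apply to read-only `GET` requests such as `/collections`. The `TTLCache` class and its injectable clock are assumptions for illustration, not part of any specific gateway product.

```python
import time
from typing import Callable, Dict, Tuple


class TTLCache:
    """Time-based response cache keyed by request URL (illustrative sketch).

    Intended only for read-only GET requests: the URL is the whole cache
    key, so a POST /search (whose filters travel in the body) must bypass it.
    """

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested
        self._entries: Dict[str, Tuple[float, bytes]] = {}

    def get_or_load(self, url: str, load: Callable[[str], bytes]) -> bytes:
        """Return a cached body if still fresh, else call `load` and cache it."""
        now = self.clock()
        entry = self._entries.get(url)
        if entry is not None and entry[0] > now:
            return entry[1]          # cache hit: backend is not contacted
        body = load(url)             # cache miss or expired: ask the backend
        self._entries[url] = (now + self.ttl, body)
        return body
```

The TTL trades freshness for load: a dashboard polling the same collection listing hits the database at most once per TTL window.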

Within the catalogue service itself, authorization relies on a Keycloak instance that verifies access tokens. Most requests, however, are read-only and do not require authorization. The Keycloak integration also makes it possible to include private collections that are accessible only to specific users.
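The split between anonymous read-only traffic and authorized write traffic can be sketched as two small checks. This assumes writes go through the STAC API Transaction endpoints (e.g. `POST /collections`), and applies a hypothetical rule that only members of a `<project>_admin` group may modify collections, following the ‘project_X_admin’ user story. Token verification against Keycloak is not shown: the group list is assumed to come from an already verified token, and its claim name and format depend on the Keycloak configuration. Private collections, whose reads would also require a token, are not covered.

```python
from typing import Iterable

# Plain read methods never need a token in this sketch.
READ_ONLY_METHODS = {"GET", "HEAD", "OPTIONS"}


def requires_token(method: str, path: str) -> bool:
    """Return True if the request must carry a verified access token.

    Note: ignores private collections, whose reads would also need a token.
    """
    method = method.upper()
    if method in READ_ONLY_METHODS:
        return False
    # STAC API Item Search may use POST, but it is still a read.
    if method == "POST" and path.rstrip("/") == "/search":
        return False
    return True  # writes, e.g. POST /collections, PUT/DELETE on a collection


def may_modify(project: str, token_groups: Iterable[str]) -> bool:
    """Hypothetical rule: only members of '<project>_admin' may write."""
    return f"{project}_admin" in set(token_groups)
```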

5.4.2 Security considerations

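Security fixes for the catalogue components (the STAC FastAPI web service, the database, and the reverse proxy or API gateway) need to be applied to all catalogue instances.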

5.5 Operational Management

For the operational deployment of the product catalogue, the APEx project is considering several configurations tailored to different project needs:

- ESA Project Result Repository (PRR): This instance serves as a long-term repository for preserving project results under the auspices of ESA. It is hosted on the ESA Cloud and is expected to receive the highest request volume. Unlike the other instances, it is not instantiated dynamically, because of its long-term nature.

- Project-Specific Catalogue with Shared Database: This option provides a cost-effective solution suitable for most projects, leveraging a shared database instance.

- Project-Specific Catalogue with Isolated Database: Designed for the most demanding projects, this configuration ensures dedicated resources but comes at a higher cost.

These configurations allow us to offer attractive choices to projects of varying sizes and requirements. Because demand for the service is hard to predict, the initial deployment may focus on the ESA PRR option alongside a second instance (with a shared database) for testing and for storing intermediate project results.

For project-specific catalogues, continuous monitoring of the Kubernetes cluster is essential to maintain optimal performance and availability. Automated maintenance tasks such as updates and backups are routinely performed to minimize downtime and ensure data integrity.
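Monitoring of response times, as requested in the operator user stories, could be reduced to a simple check per catalogue instance. The sketch below computes a nearest-rank 95th percentile over recent latency samples and flags instances above a threshold; the source of the samples (for example proxy access logs) and the threshold value are assumptions.

```python
from typing import Dict, List


def p95(samples_ms: List[float]) -> float:
    """95th percentile of a non-empty sample list, nearest-rank method."""
    ordered = sorted(samples_ms)
    # Nearest rank = ceil(0.95 * n), computed with integers to avoid float error.
    rank = (95 * len(ordered) + 99) // 100
    return ordered[rank - 1]


def misbehaving(latencies: Dict[str, List[float]], threshold_ms: float) -> List[str]:
    """Return the names of instances whose p95 latency exceeds the threshold."""
    return sorted(
        name for name, samples in latencies.items()
        if samples and p95(samples) > threshold_ms
    )
```

For example, an instance whose samples are 1 ms to 100 ms has a p95 of 95 ms and would be flagged with a 50 ms threshold. A percentile is used rather than the mean so that a minority of slow requests is not averaged away.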